Initial Thoughts on PDR - Building a Universal Radio Device Control Frontend Using Mobile Browsers


Overview

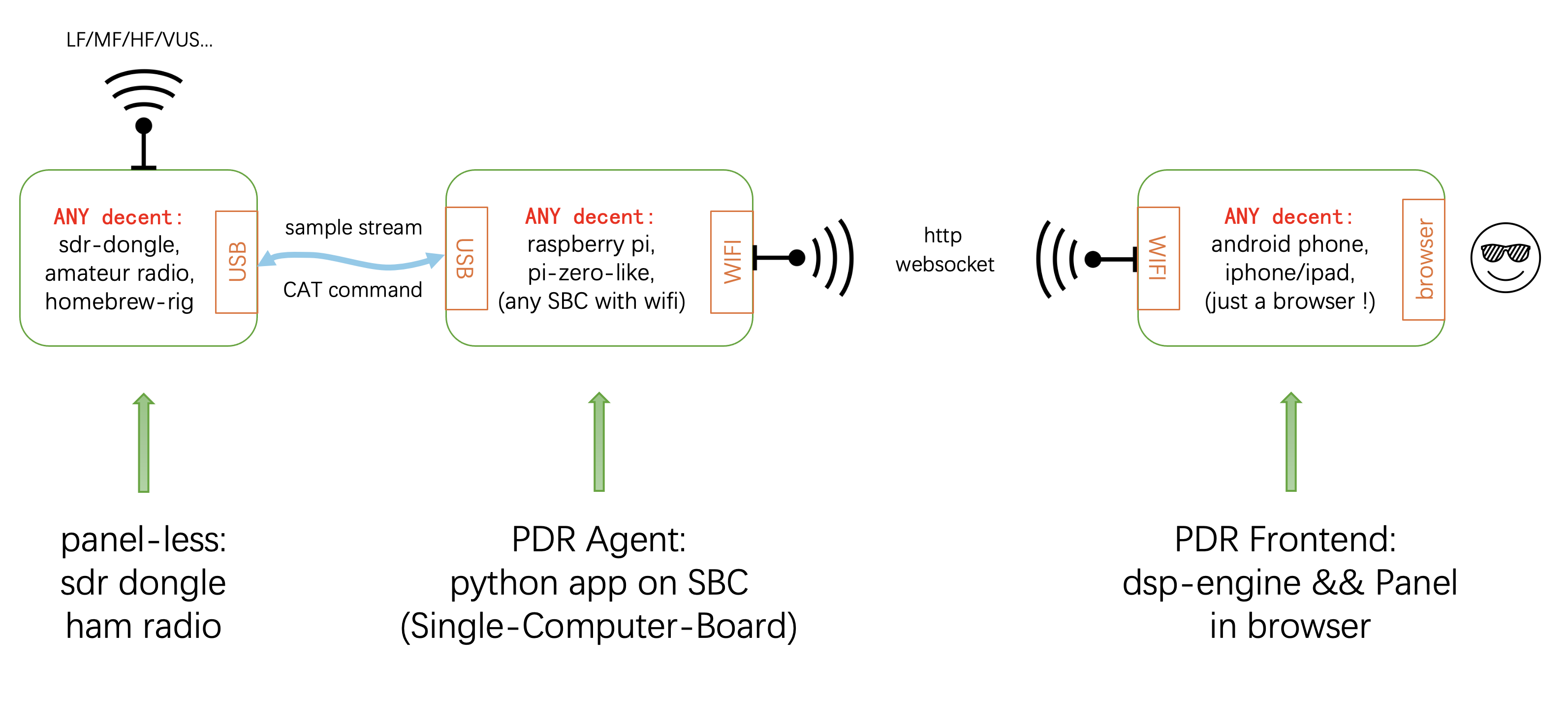

This article proposes a concept: using the mobile browser as a universal control frontend for ham radio devices. This would make operating more convenient, and it would let builders of homemade devices leave out the human-machine interface entirely (similar to a headless RTL-SDR stick).

Motivation

I rarely go on full-scale QRP field operations; I usually set up during park walks with my kids or on weekend outings, so setup and teardown must be quick and the equipment must be portable. Often a small bag holds everything (EFHW/fishing rod antenna, 50-1.5 feed line). Even the Icom IC-705 is too heavy for many occasions, so I have been looking for small QRP devices (or homemade solutions) for a while. I found many good open-source projects, such as Hermes-Lite2 and T41-EP, but these are almost all base station solutions. QRP-Labs' QMX seems good, but I still want a large waterfall display, and its closed-source nature has slowed the progress of its SSB functionality. Only some high-end commercial products, like those from Elecraft, combine a spectrum display with portability. For homemade QRP devices, a spectrum display, powerful expansion, and flexible control seem to be mutually exclusive.

I was thinking: in the era of SDR, with chips and even SoCs being so advanced, the only remaining hurdle for miniaturizing homemade devices seems to be the control panel. Considering size, power consumption, and software/hardware complexity, these non-core parts are the troublesome ones. Current SDR solutions are all similar (ADC/DAC/microcontroller/Tayloe...), but the panel implementations are diverse, with various buttons, knobs, and even key matrices... Each device uses cutting-edge chips inside, but the casing looks like it belongs to the 19th century.

Look at the development of mobile phones. The densely packed keyboards of early BlackBerry and Nokia have long been eliminated; now, a single touch screen handles everything, simple and convenient, with high flexibility (software-defined touch functions). For homemade device authors, integrating a touch screen is not difficult in hardware, but developing the accompanying UI is too labor-intensive.

Devices like RTL-SDR sticks hand the interaction frontend over to software completely, which has led to a lot of excellent SDR software from the community, but most of it targets desktop computers. Recently a nice app called FT8CN appeared, but first, it only covers the protocol layer, and second, it only runs on Android, so I have to carry two phones when I go out...

...Right, mobile phones. I suddenly thought: everyone carries a mobile phone, even on a SOTA summit. Why not use the phone screen as the device's control terminal? This would be very convenient and bring many benefits:

- Flexible functionality. Control is no longer locked to hardware buttons/knobs; it is software-defined, expandable, and updatable at any time.

- Large enough, clear enough, high-resolution waterfall display.

- Convenient. We can lie under a big tree, far away from the antenna/radio, and operate (my vertical fishing rod antenna no longer needs a ten-meter feed line to keep my body from interfering with it...).

- For homemade devices, the entire control circuit can be omitted, reducing the device to the core BPF+preamp+RF receive/transmit+up/down convert frontend.

- ...Even omit the modulation/demodulation/codec/DSP part (the phone can do it!).

Moreover, the above requirements are almost universal and similar for every device, which can be extracted as an open-source project for public use!

But, is it feasible?

Feasibility Study

- First, we need to extract the I/Q data from each device. Commercial devices with a modern SDR architecture already provide this interface; for homemade devices, it is simply the raw data after baseband sampling, which is very convenient to output. ✅

- Secondly, the device needs to expose a control channel for input and output. For commercial devices, CAT is already standard; for homemade devices, it just adds a bit of complexity: the microcontroller's logic has to provide the control channel. ✅

- But how do we connect to the phone? Bluetooth does not have enough bandwidth, which leaves only WiFi. Some newer radios (like my Icom IC-705 or the Xiegu X6100) already have WiFi built in, but for other devices, especially homemade ones, integrating high-speed WiFi is a challenge. It is out of the question for 8-bit machines like the uSDX, and quite difficult even for STM32-based designs like the QMX. To achieve WiFi speeds above 100 Mbps, you need to start with 5 GHz WiFi chips and use more complex integrations like DMA+SDIO.

Initially, I was stuck on this idea.

...But then I thought, we could use a third-party system to bridge it. For example, a Raspberry Pi? USB → WiFi!

- For commercial devices, the USB interface is usually already there. For homemade devices, many microcontrollers have a built-in USB PHY, so adding USB (even 2.0) is just a matter of soldering a connector, and the USB software stack is generally provided in the chip manufacturer's SDK. ✅

- Everyone has a Raspberry Pi (or Orange Pi/Banana Pi...). These gadgets have USB, WiFi, can run Python, and have tremendous computing power, capable of high-speed WiFi data forwarding (WiFi speed depends on the Pi's performance)! ✅

- Okay, the phone can connect remotely. What about the app? Android is relatively easy, as you can install any application; but iOS is notoriously closed, with its development, listing, review, and release being very troublesome.

Stuck again? But we have the almighty web browser! We don't need native, installable apps; we need web apps (i.e., web pages)! ...But the web is a sandboxed environment, and JavaScript is a relatively low-performance scripting language. Running high-intensity algorithms, is this feasible?

- Playing sound on the web is not difficult (though it still requires some tricks); as for the microphone, the browser can capture the phone's microphone (getUserMedia) and process it with the Web Audio API. This solves the problem of speakers and handheld microphones. ✅

- If we want the phone to do the DSP work (modulation/demodulation, codecs, digital protocol decoding, digital filters, etc.), plain JS is too slow. But now there is WebAssembly, which can achieve near-native performance in the browser. Coupled with the computing power of modern phone CPUs (FPU, NEON/SIMD), I think processing a few MHz of spectrum should not be a big problem! (Hopefully) ✅

- There's still the CW key issue... Of course, implementing it on the phone (letters to Morse) is simple, but for enthusiasts of traditional keys, it's better to plug the key into the radio... Or make a simple piece of hardware that sends the keying to the Raspberry Pi over Bluetooth (the Web Bluetooth API is still a draft and needs time)? Anyway, I'm not too concerned about this. ✅
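The "letters to Morse" part really is small. Here is a minimal sketch, assuming standard PARIS timing (one dot lasts 1.2 / WPM seconds). The names `text_to_morse` and `keying_schedule` are made up for illustration, and a browser version would be the same logic in JavaScript:

```python
# Sketch only: text -> Morse symbols -> key-down/key-up durations.
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
    "0": "-----", "1": ".----", "2": "..---", "3": "...--", "4": "....-",
    "5": ".....", "6": "-....", "7": "--...", "8": "---..", "9": "----.",
    "/": "-..-.", "?": "..--..",
}


def text_to_morse(text):
    """Return a readable Morse string; unknown characters are skipped."""
    words = text.upper().split()
    return " / ".join(
        " ".join(MORSE[c] for c in word if c in MORSE) for word in words
    )


def keying_schedule(text, wpm=20):
    """Return a list of (key_down, seconds) tuples for the given text."""
    unit = 1.2 / wpm  # dot length in seconds (PARIS convention)
    schedule = []
    for w, word in enumerate(text.upper().split()):
        if w > 0:
            schedule.append((False, 7 * unit))      # gap between words
        symbols = [MORSE[c] for c in word if c in MORSE]
        for s, symbol in enumerate(symbols):
            if s > 0:
                schedule.append((False, 3 * unit))  # gap between characters
            for e, element in enumerate(symbol):
                if e > 0:
                    schedule.append((False, unit))  # gap inside a character
                schedule.append((True, unit if element == "." else 3 * unit))
    return schedule


if __name__ == "__main__":
    print(text_to_morse("CQ CQ"))
    for key_down, seconds in keying_schedule("CQ", wpm=20):
        print("down" if key_down else "up  ", round(seconds, 3))
```

The schedule could then drive either a sidetone in the browser or a key line on the agent; which side does the actual keying is still an open design question.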

Notice that all the hardware mentioned above is ready-made (radio USB, Raspberry Pi, phone), and we don't need to solder anything! The only thing missing is the software. Moreover, web development is much simpler than Verilog or embedded development, allowing more people to participate in the project!

Now, let me share my initial thoughts.

Architecture

- The only requirement for the device: a USB interface that can input and output I/Q samples and CAT. This includes but is not limited to various radios, SDR sticks, and homemade devices.

- Then you need a "Pi" with sufficient WiFi speed. What counts as "sufficient" depends on the sampling rate you need (spectrum bandwidth), the performance of the Pi's antenna, the distance between you and the Pi, obstructions, the Pi's computing performance, and other factors. In any case, my guess is that for a spectrum a few hundred kHz wide, even something as small as the Raspberry Pi Zero 2 should be fine. Of course, if you want a 10 MHz spectrum like the SDRplay RSP1 offers, you might need a single-board computer at the level of the Raspberry Pi 4B or above...

- For the phone, apart from performance (not too old), there are no other requirements.
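To get a feeling for what "sufficient" WiFi speed means, the raw I/Q bitrate can be estimated with simple arithmetic. This is a rough sketch under two assumptions: the complex sample rate is about equal to the displayed bandwidth, and each I and Q component is sent as a 16-bit integer. The function name and the `overhead` parameter are hypothetical.

```python
def iq_bitrate_mbps(sample_rate_hz, bits_per_component=16, overhead=0.0):
    """Estimate the raw I/Q stream bitrate in Mbit/s.

    Each complex sample carries two components (I and Q).
    `overhead` is a fractional allowance for framing/protocol overhead
    (an assumption; measure it on a real link).
    """
    bits_per_second = sample_rate_hz * 2 * bits_per_component * (1 + overhead)
    return bits_per_second / 1e6


if __name__ == "__main__":
    for rate in (192_000, 2_000_000, 10_000_000):
        print(f"{rate / 1e6:6.3f} MS/s -> {iq_bitrate_mbps(rate):8.3f} Mbit/s")
```

With these assumptions, 192 kS/s needs about 6.1 Mbit/s, while 10 MS/s needs 320 Mbit/s before any overhead. Compare the result with the WiFi throughput you actually measure between your Pi and your phone, not with the nominal link speed.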

Power the Pi with a battery, connect it to your radio (or SDR stick) with a USB cable, turn on the WiFi hotspot, open your phone, find some shade, and you can start CQing!

Agent Architecture

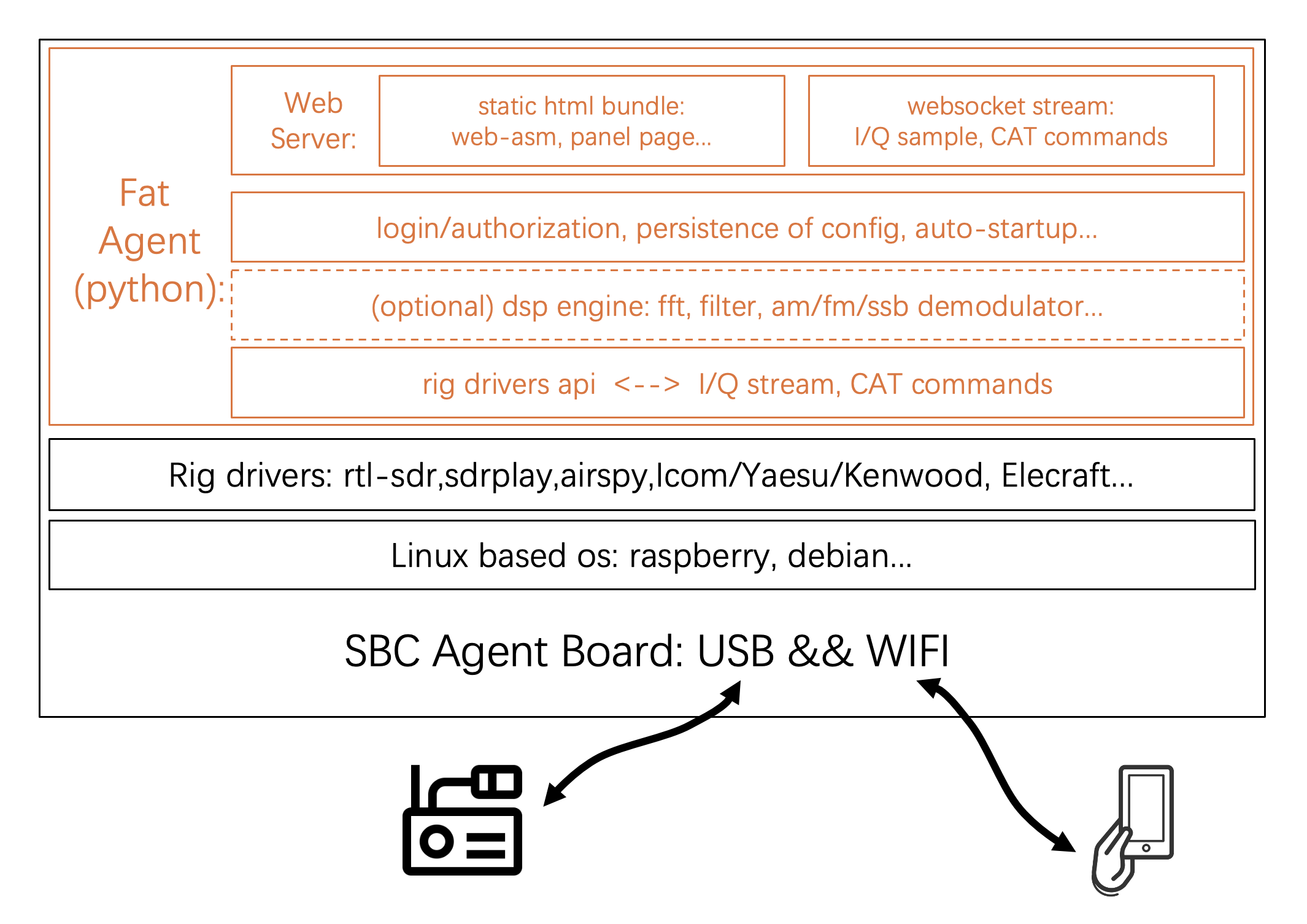

The agent solution based on single-board computers like the Raspberry Pi is actually simple to implement, and a Python script can handle it. I call it the "Fat Agent":

- Install the USB drivers for the SDR/radio device on the Pi.

- Then, in the script, you need to implement some glue code (vendor-specific) to extract the I/Q data stream, CAT control commands, etc.

- If your Pi has excess performance (or battery?), you can consider running DSP directly on it to process the signal chain. Libraries like csdr are already waiting for us...

- Or send the raw sample stream to the phone via a Python script for processing by the browser.

- The control panel pages opened in the phone's browser are served to the phone by a web server running in the same Python script.

- That's basically it! (Maybe add some peripheral functions like login/authentication, user configuration storage, etc.)
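As an illustration of the "send the raw sample stream to the phone" step, here is a minimal sketch of how the agent might pack I/Q samples into binary frames (for example, one frame per WebSocket binary message). The wire format is entirely hypothetical: the `PDR1` magic, the header layout, and the function names are assumptions for illustration, not an existing protocol.

```python
import struct

# Hypothetical frame layout (little-endian, no padding):
#   4 bytes  magic "PDR1"
#   uint32   sequence number (lets the browser detect dropped frames)
#   uint32   sample rate in Hz
#   uint16   number of complex samples in this frame
# followed by interleaved int16 I, Q, I, Q, ...
MAGIC = b"PDR1"
HEADER = struct.Struct("<4sIIH")


def pack_iq_frame(seq, sample_rate_hz, samples):
    """Pack a list of (i, q) int16 pairs into one binary frame."""
    flat = [value for pair in samples for value in pair]
    header = HEADER.pack(MAGIC, seq, sample_rate_hz, len(samples))
    return header + struct.pack(f"<{len(flat)}h", *flat)


def unpack_iq_frame(frame):
    """Inverse of pack_iq_frame; returns (seq, sample_rate_hz, samples)."""
    magic, seq, sample_rate_hz, count = HEADER.unpack_from(frame, 0)
    if magic != MAGIC:
        raise ValueError("not a PDR frame")
    flat = struct.unpack_from(f"<{2 * count}h", frame, HEADER.size)
    return seq, sample_rate_hz, list(zip(flat[0::2], flat[1::2]))


if __name__ == "__main__":
    frame = pack_iq_frame(1, 192_000, [(100, -100), (32767, -32768)])
    print(len(frame), unpack_iq_frame(frame))
```

A fixed little-endian header keeps parsing on the browser side trivial (a `DataView` over the message buffer would do), which matters more here than squeezing out a few bytes.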

Looking further into the future.

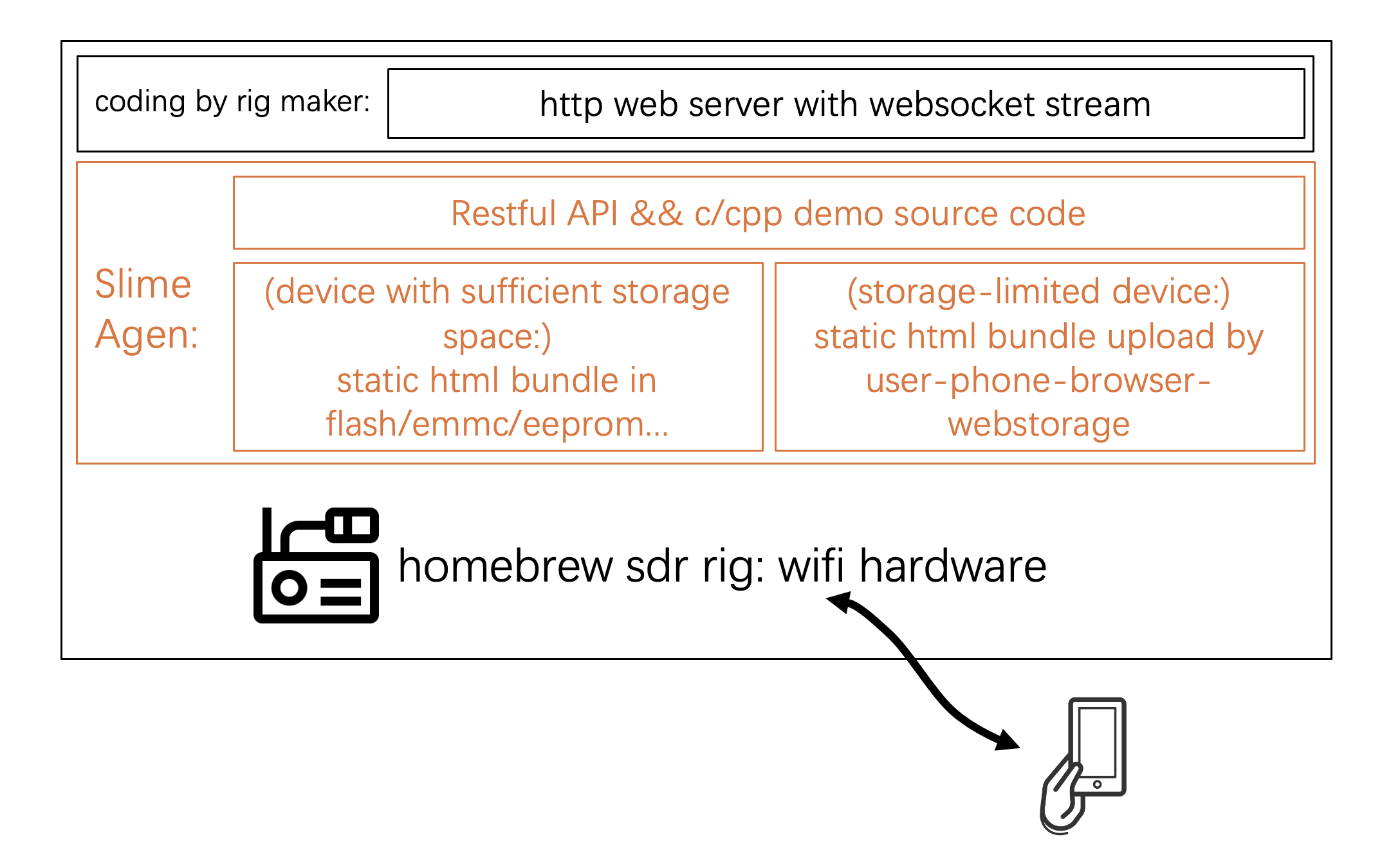

With such a web control panel on the phone, future homemade device authors can directly embed it into their homemade devices, eliminating the third-party agent (Raspberry Pi) solution. I call it the "Slim Agent":

- First, the premise is that your device can integrate a decent WiFi chip. Thanks to industrialization, this is not a big problem (apart from a slight increase in cost). WiFi SoC solutions are now mature and diverse, from dedicated WiFi 6 chips at the high end down to microcontrollers like the ESP32 that integrate a CPU and WiFi on one chip.

- Integrate the Slim Agent: if your microcontroller can run an RTOS (if it can run Linux, even better), the Slim Agent project can (hopefully!) provide some libraries for you; at the very least, it can provide demo code as a reference for implementing the agent's API. Basically, you need to develop a basic web server that supports WebSocket.

- As for the glue code for software and hardware logic control, you need to implement it yourself.

- Burn the web frontend pages to your flash. If you can't spare a few megabytes of storage space, consider having these page files uploaded (once) through the user's mobile phone, relayed by the web server, and then sent back to the user's browser...

- Done! This way, you save all the speakers, microphones, jacks, audio amplifiers, LCD screens, all buttons/knobs... and related software development of your hardware!

- Moreover, you don't need a Raspberry Pi as an intermediary anymore!
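The one non-obvious piece of "a basic web server that supports WebSocket" is the opening handshake: the server has to answer the browser's `Sec-WebSocket-Key` header with a derived `Sec-WebSocket-Accept` value, as defined in RFC 6455. A minimal sketch follows, written in Python for readability (firmware in C or MicroPython would follow the same steps); the function names are made up for illustration.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def websocket_accept(client_key):
    """Compute Sec-WebSocket-Accept from the client's Sec-WebSocket-Key."""
    digest = hashlib.sha1((client_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def build_handshake_response(client_key):
    """Return the HTTP 101 response bytes that complete the upgrade."""
    return (
        "HTTP/1.1 101 Switching Protocols\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Accept: {websocket_accept(client_key)}\r\n"
        "\r\n"
    ).encode("ascii")


if __name__ == "__main__":
    # Sample key taken from RFC 6455, section 1.3.
    print(build_handshake_response("dGhlIHNhbXBsZSBub25jZQ==").decode("ascii"))
```

After this response, the connection carries WebSocket frames instead of HTTP; frame parsing (masking, lengths, opcodes) is the remaining work and is not shown here.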

Web Front-end

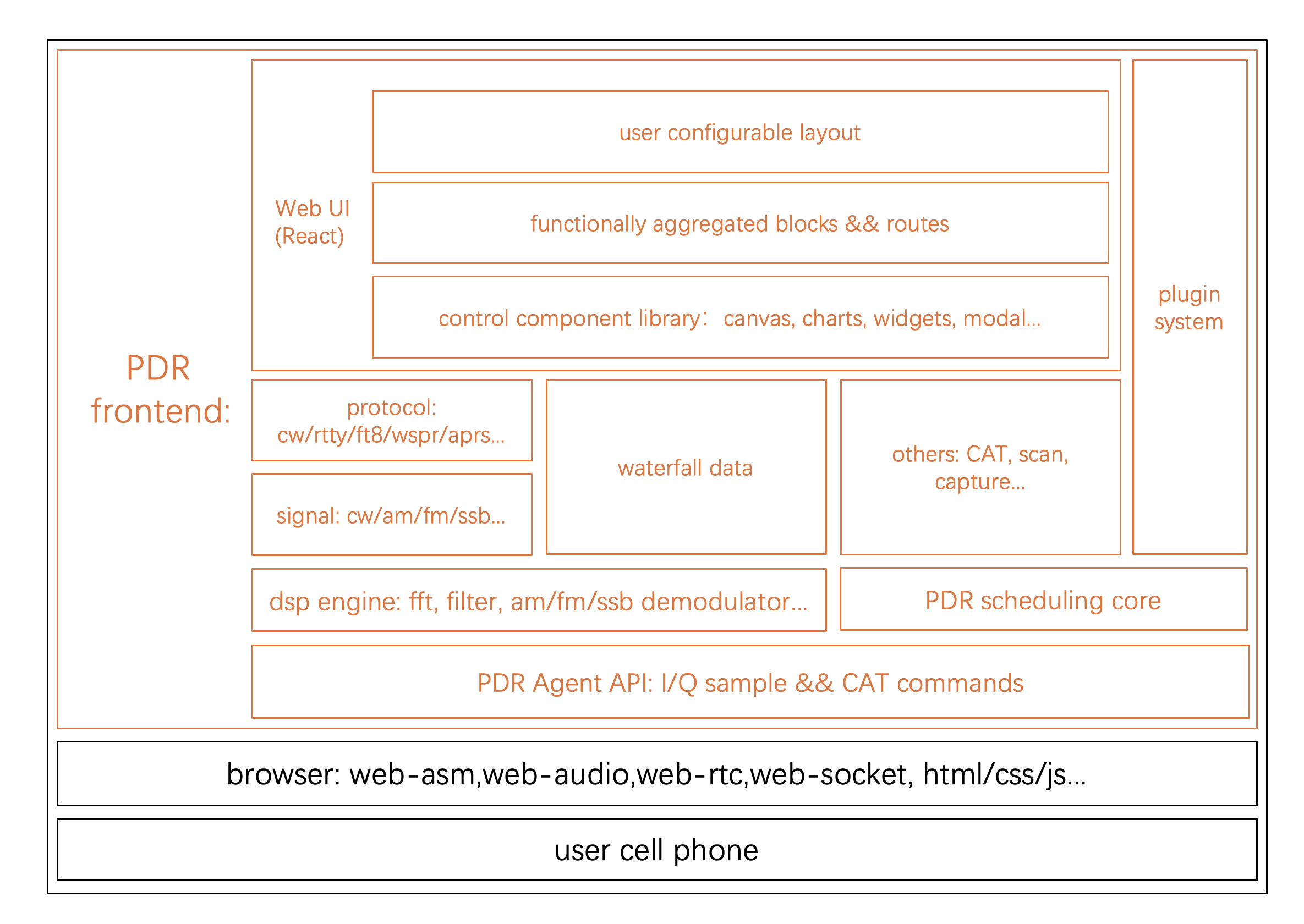

The bulk of the (envisioned) project development is in the web front-end. If possible, I hope to make it an open system: not only is the code open, but more importantly the system itself is open, so that everyone can participate and contribute algorithms, functions, controls, plugins, or any interesting ideas!

- Thanks to the development of web technology, the most difficult parts have already been implemented at the lowest level by browser vendors: WebAssembly, Web Audio, WebSocket...

- What we need to implement is, first of all, the API layer that communicates with the agent, which should be as general and fixed as possible.

- Then the DSP algorithm part. A lot of FFT-related and radio-related C/C++ code already exists; it will take some time and effort to organize it and compile it into WebAssembly libraries.

- It may be necessary to implement a simple core (state machine/scheduler/engine/whatever...) to glue the vertical and horizontal components and architecture of the entire system together.

- ...and (hopefully later) provide an open plugin system.

- If there is more energy (contributors), implement some common protocols and other application-layer functions, such as the WSJT-X family of protocols, the PSK31 variants, APRS, RTTY, and CW decoding.

- For the UI layer, I hope to create an open set of controls/components that everyone can develop and extend.

- Achieve a certain degree of interface layout freedom, allowing users to arrange it according to their preferences and commonly used functions.
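To make the DSP part more concrete, here is a small sketch of the core of a waterfall display: turning one block of I/Q samples into one row of power values in dB. It is written in pure Python so the logic is easy to check; a real front-end would presumably use an optimized FFT compiled to WebAssembly instead. The function names, the Hann window, and the -120 dB floor are illustrative choices, not a fixed design.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return list(x)
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def waterfall_row(iq, floor_db=-120.0):
    """Return one waterfall row (dB per bin) from complex I/Q samples.

    Bins are reordered so that the lowest (most negative) frequency is on
    the left and the tuned center frequency is in the middle.
    """
    n = len(iq)
    if n < 2:
        raise ValueError("need at least two samples")
    # Hann window to reduce spectral leakage between neighboring bins.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]
    spectrum = fft([s * w for s, w in zip(iq, window)])
    shifted = spectrum[n // 2:] + spectrum[:n // 2]
    return [
        max(floor_db, 10 * math.log10((abs(c) / n) ** 2 + 1e-20))
        for c in shifted
    ]


if __name__ == "__main__":
    n = 64
    tone = [cmath.exp(2j * math.pi * 8 * i / n) for i in range(n)]  # bin +8
    row = waterfall_row(tone)
    print("peak bin:", row.index(max(row)))
```

Each row would then be mapped to colors and drawn as one line of the waterfall; stacking successive rows over time gives the familiar scrolling display.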

How Far Are We from This Vision?

Well, it looks like there is still a lot to design and develop. But considering that projects like WebSDR/OpenSDR have been around for many years, there are actually many references available. What is needed is to learn, refactor, and start coding...

Expected implementation steps:

- First, implement a demo system to validate feasibility by connecting the chain.

- Design the agent API and implement the Fat Agent.

- Compile and implement the DSP library based on WebAssembly.

- Implement the waterfall display and at least one protocol (FT8?).

- Design the UI control library system.

- Initially implement the panel application.

- Mid to late stages (after having a community or enough followers):

  - Implement the Slim Agent system (waiting for some interested device DIY enthusiasts to drive it together).

  - Implement more protocols and applications.

  - Design and implement a plugin system.

- Iterate, keep iterating...
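As a starting point for the "design the agent API" step, here is a sketch of how a generic control message from the browser might be translated into a vendor-specific CAT command on the agent. Everything about the message format is hypothetical (the `op` and `hz` fields, the function name). The CAT side assumes a radio that speaks Kenwood-style ASCII CAT, where `FA` followed by an 11-digit frequency in Hz and a semicolon sets VFO A; other radios need their own translation (the IC-705, for example, uses Icom's CI-V instead).

```python
import json


def control_to_cat(raw_message):
    """Translate a JSON control message into Kenwood-style CAT bytes.

    The JSON schema here is an assumption for illustration:
      {"op": "set_frequency", "hz": 7074000}
      {"op": "get_frequency"}
    """
    msg = json.loads(raw_message)
    op = msg.get("op")
    if op == "set_frequency":
        hz = int(msg["hz"])
        if not 0 <= hz < 10**11:
            raise ValueError("frequency out of range for an 11-digit field")
        return f"FA{hz:011d};".encode("ascii")
    if op == "get_frequency":
        return b"FA;"
    raise ValueError(f"unsupported op: {op!r}")


if __name__ == "__main__":
    print(control_to_cat('{"op": "set_frequency", "hz": 7074000}'))
    print(control_to_cat('{"op": "get_frequency"}'))
```

Keeping the browser-facing messages generic and pushing the vendor details into a function like this is what would let the same front-end drive different radios.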

About the Name PDR

Phone-Defined-Radio!

I hope all this is valuable and that I can find time to verify and start it as soon as possible.

73

by bd8bzy